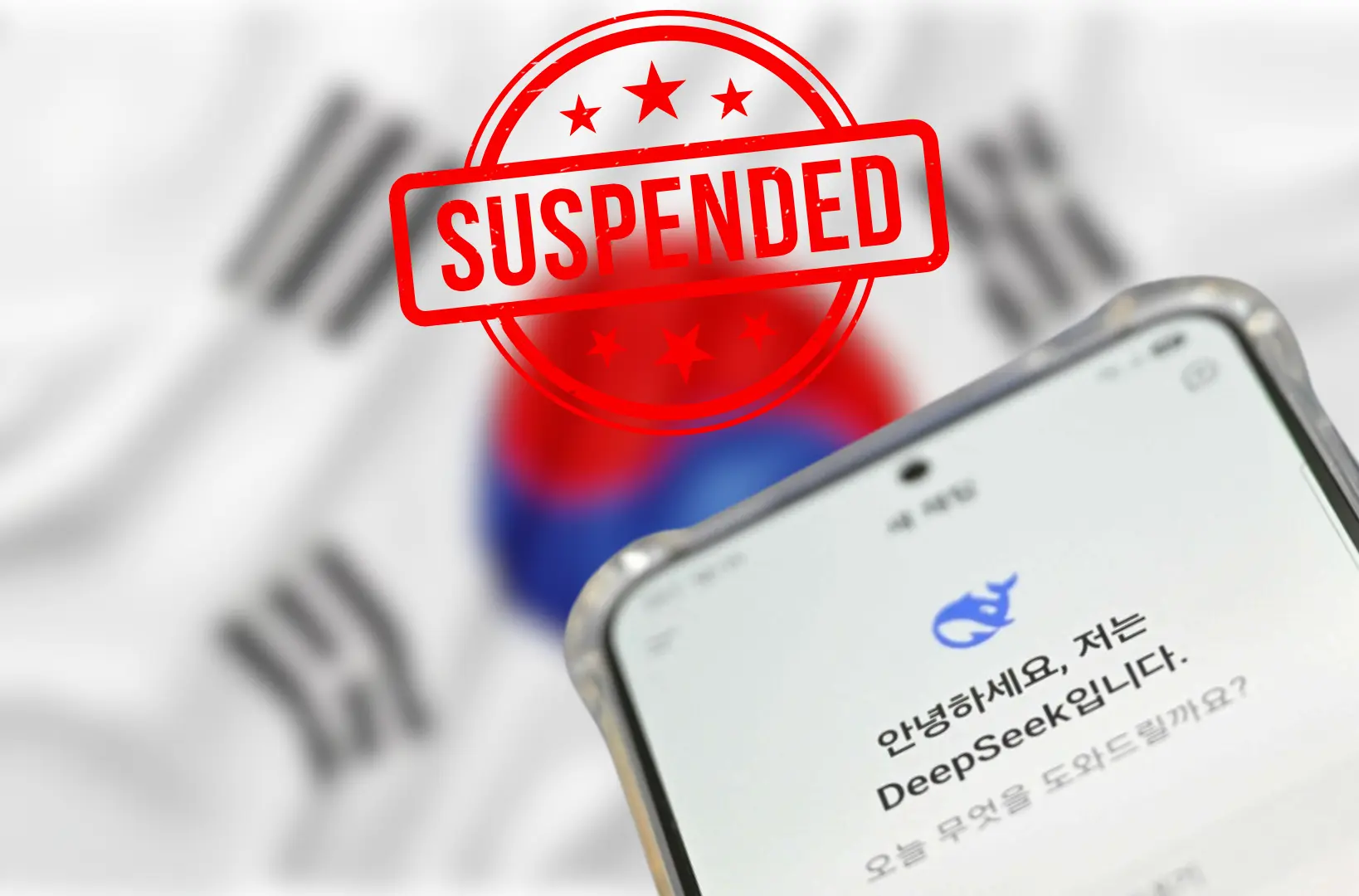

Hey AI enthusiasts! The AI world is buzzing, and not just about the latest breakthroughs. DeepSeek, an AI model making waves for its capabilities, has hit a snag: South Korea recently suspended new downloads of the DeepSeek app, and it’s got everyone talking. This isn’t a minor hiccup; it raises serious questions about data privacy and the future of AI regulation. Like many AI systems, DeepSeek relies on user data to learn and improve, and the South Korean government has raised concerns about how it handles that information. The suspension is bigger than one company. It’s a shot across the bow, highlighting the growing tension between rapid AI development and the need to protect user privacy.

So, what’s the big deal? What are the specific concerns? And what does this mean for the future of AI? Let’s dive in and break it down.

What is DeepSeek, Anyway?

DeepSeek, for those who haven’t come across it, is a pretty slick AI model. Think of it like a souped-up take on the AI assistants you might have played around with. It’s designed for a range of tasks, from coding and writing to answering everyday questions. The potential is huge, which is why it’s been getting a lot of attention. But with great power comes great responsibility, right? And that’s where the South Korean situation comes in.

The Data Dilemma: The Heart of the Matter

The issue? User data. It’s a hot-button topic in the AI world right now. Basically, the South Korean government is concerned about how DeepSeek handles the data it collects from users. And let’s be real, AI models like DeepSeek thrive on data. They learn and improve by analyzing tons of information, and a lot of that info comes from us, the users.

Think about it: when you chat with an AI, ask it questions, or use it to generate content, you’re feeding it data. This data can include anything from your search queries and writing style to even personal details if you’re not careful. And that’s where the concern lies. What happens to all that data? How is it being used? Is it secure? These are the questions South Korea is asking.

South Korea’s Stance: Protecting User Privacy

Now, it’s not like South Korea is just randomly targeting DeepSeek. The country has been pretty vocal about the importance of data privacy and security in the AI space. It has data protection rules on the books, including its Personal Information Protection Act, and its regulators are serious about enforcing them. This isn’t just about DeepSeek; it’s about setting a precedent for how AI companies operate in South Korea and ensuring a safe digital environment for its citizens.

The Specific Concerns: What’s the Worry?

So, what exactly are the South Korean authorities worried about? Well, they haven’t released all the specifics, but it’s likely related to a few key areas:

- Data Collection: How much data is DeepSeek collecting? Are they collecting more than they need? Are they being transparent about what data they’re grabbing? Are they collecting sensitive information without explicit consent?

- Data Usage: What are they doing with the data? Are they using it solely to improve DeepSeek, or are they sharing it with third parties? Are they using it for targeted advertising or other purposes that might be sketchy? Could the data be used for profiling or other potentially harmful activities?

- Data Security: How secure is the data? Is it protected from hackers and unauthorized access with robust encryption and security protocols? Data breaches are a huge deal, and the government wants to make sure user data is locked down tight.

- User Consent: Are users being properly informed about how their data is being used? Are the consent mechanisms clear, understandable, and easily accessible? Are users given a real choice about whether or not to share their data? This is all about giving users control over their own information.

The Bigger Picture: Innovation vs. Regulation

This whole situation is a big deal for the AI industry. It highlights the growing tension between innovation and regulation. AI is moving super fast, and governments are scrambling to keep up. They want to encourage AI development, but they also need to protect their citizens’ data. It’s a tricky balancing act. Finding the right balance is crucial for fostering innovation while safeguarding individual rights.

The Impact on DeepSeek and the Industry

For DeepSeek, this download suspension is a major setback. It not only affects their user base in South Korea but also sends a message to other countries: “Hey, if you’re not playing by the rules, we’re not afraid to take action.” If other countries follow suit, the pressure on AI companies to be more transparent and responsible with data will grow, and the resulting domino effect could force the entire industry to re-evaluate its data practices.

A Silver Lining? Potential Benefits

But it’s not all doom and gloom. This could actually be a good thing in the long run. It could force AI companies to prioritize data privacy and security from the get-go, rather than treating it as an afterthought. It could also lead to the development of better data governance frameworks and regulations, which would benefit both users and AI developers.

Think about it: if users feel confident that their data is being handled responsibly, they’ll be more likely to use AI products and services. That’s a win-win for everyone. AI companies can thrive, and users can enjoy the benefits of AI without worrying about their privacy being violated. This increased trust can lead to wider adoption and acceptance of AI technologies.

The Future of AI: A Call for Responsibility

So, what’s next? Well, DeepSeek is likely working hard to address the South Korean government’s concerns. They might need to make some changes to their data collection, usage, and security practices. They might also need to be more transparent about how they handle user data. It’s a learning process for everyone involved. Open communication and collaboration between AI developers and regulators are essential for navigating these challenges.

This whole situation is a reminder that AI is not just about cool technology; it’s also about ethics, responsibility, and the impact on society. As AI enthusiasts, we need to be part of the conversation. We need to ask tough questions and demand accountability from AI companies. We need to make sure that AI is developed and used in a way that benefits everyone, not just a select few. We must advocate for responsible AI development that respects human rights and values.

Keep your eyes peeled on this story. It’s still unfolding, and it’s bound to have a big impact on the future of AI. Let’s keep the conversation going! What do you guys think about the whole DeepSeek situation?

Hit me up in the comments! Let’s discuss the implications of this decision and how we can ensure a future where AI benefits all of humanity.

Burhan Ahmad is a Senior Content Editor at Technado, with a strong focus on tech, software development, cybersecurity, and digital marketing. He has previously contributed to leading digital platforms, delivering insightful content in these areas.